HTTP/2 and Subresource Integrity don't always get on

I recently wrote a couple of blog posts about the WebPageTest waterfall chart, one on this very blog here, and another on this years PerfPlanet 2019. I’ve learnt lots while writing them and also had some really great feedback and questions. One question in particular by @IacobanIulia really opened a can of worms. What initially I thought was a simple case of Chrome stair-stepping turned out to be something a little more complicated. First a little bit of context about the waterfall chart in question.

The Waterfall

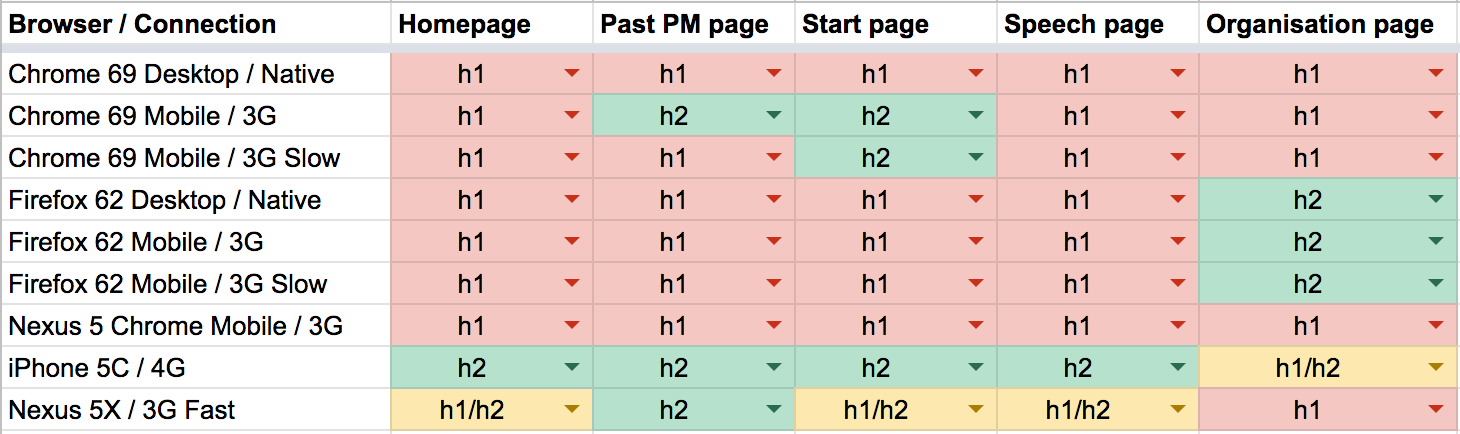

Back in November 2018 we (at GDS) were trialing the use of HTTP/2 on GOV.UK. According to quite a few sources, enabling HTTP/2 should improve web performance for users by introducing technology like multiplexed streams, HPACK header compression and stream prioritisation. But at GDS we try not to enable features blindly and accept them at face value, so I decided to setup a set of tests to see if enabling HTTP/2 did actually improve performance. I won’t go into all the (synthetic) test details here, but from testing 5 different page types on multiple devices, browsers and connections, it was found that it actually made performance worse in many cases. Sometimes only by a few 100 ms, other times by 10 seconds+ on a 2G connection (metrics examined: First visual change, Visually complete 95%, Last visual change, Speed index, load time). Not great, so I’m glad I tested! Summary of results from the tests can be seen below:

This was quite unexpected and I spent the next few days trying to work out what was going on, and why performance actually seemed worse. It was very easy to see that HTTP/2 was enabled as the HPACK header compression was working:

HTTP/1.1

h2load https://www.gov.uk -n 4 --h1 | tail -6 | head -1

traffic: 117.22KB (120036) total, 2.45KB (2504) headers (space savings 0.00%), 114.36KB (117104) data

HTTP/2

h2load https://www.gov.uk -n 4 | tail -6 | head -1

traffic: 115.28KB (118042) total, 793B (793) headers (space savings 67.82%), 114.36KB (117104) data

68% saving in header data over H2 compared to H1. Another easy confirmation was the shape of the resulting waterfall graphs were totally different. So it was enabled and the browsers were seeing the difference.

The performance issue

In many cases a lot of the tests were only slower by around 100-200ms. It doesn’t sound like much, but that’s quite a lot of time in the web performance world. Especially considering all we had done was enabled HTTP/2 on the CDN. It all boiled down to the fact that after the initial HTML download, all other page assets were being delayed (by ~100-200ms). On slower devices and connections, this short delay at the start of the waterfall was compounded, leading to a difference of many seconds by the end of the page load.

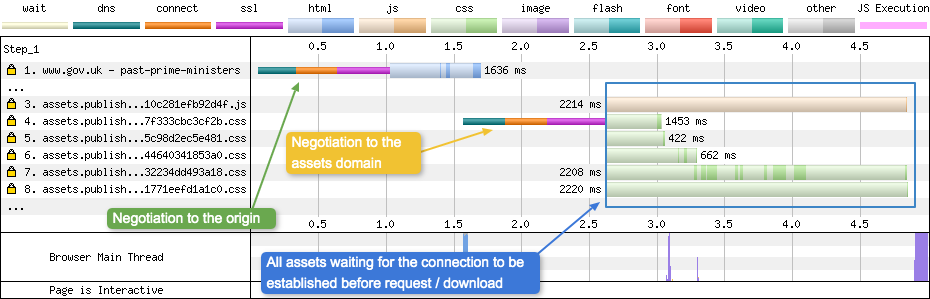

Now at the time, I put this down to the fact that we have a separate assets domain that all our static assets are served from. The DNS + Connect + SSL negotiation to this “asset” domain had become a bottleneck under HTTP/2:

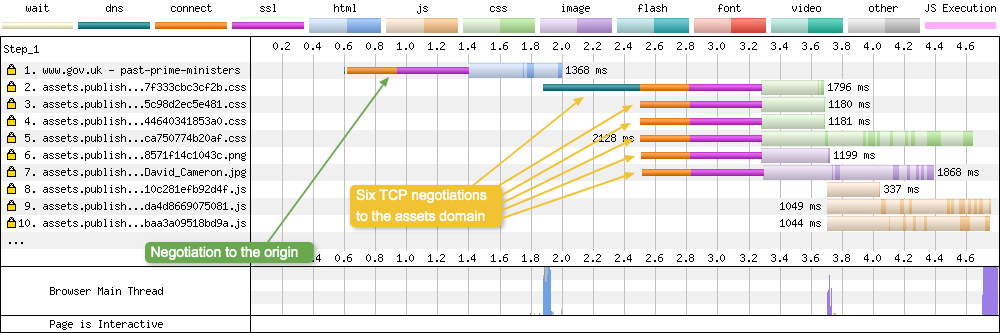

As you can see from the waterfall, none of the other assets can be requested or downloaded until that 2nd TCP connection is established. If you compare it to the HTTP/1.1 version of the waterfall, you will see the difference quite clearly:

Under HTTP/1.1, the browser opens multiple TCP connections (usually around 6) to maximise connection throughput by downloading files in parallel. It is the browser that is deciding the priority of the assets being requested. So it requests the highest priority ones first (the CSS). And since there are 6 parallel connections to choose from, it can do this very quickly.

With HTTP/2, everything is prioritised and streamed over a single TCP connection (for optimal performance). It is the server that is deciding the priority (often badly unfortunately). The browser simply requests a whole bunch of files, then the server will drip feed them to the browser in the order it thinks is best. The browser is very dependant on this single connection being negotiated quickly. Until it does, nothing can be downloaded from the domain.

In my head this was the issue that was slowing the pages down. The impact of the second domain (or shard as they are also known) needed to be minimised. So following this path we started to look into potential fixes.

1 - Preconnect header

The theory is, if you can establish a connection to the assets domain as soon as possible, this should speed up all downloads from that domain when they are actually required. The quickest way to do this is to use the preconnect header. As soon as the HTML page headers are received, the browser is given a hint that in the future it will need to connect to another domain. Example below doesn’t add the crossorigin attribute but it is an option, more on this later.

Link: <https://www.example.com>; rel=preconnect

There are two methods to tell a browser about the preconnect. Either as a header, or as link element in the HTML <head>. We choose the header method as it is easier to roll-out across the whole site, and Edge (18) only supports the header version. This will all change with the release of Edge Chromium (76).

Notice that this is only a hint. The browser may choose to ignore the hint if it already has everything under control. We tested with & without the preconnect header and it didn’t make any difference from what we could see. For more information on the preconnect header checkout ‘Improving Perceived Performance With the Link rel=preconnect HTTP Header’ by Andy Davies.

2 - HTTP/2 connection coalescing

HTTP/2 connection coalescing, in theory should allow you to have your cake and eat it when it comes to migrating from HTTP/1.1 domain sharding, to HTTP/2. A simple explanation is: if a domain and a sub-domain share similar properties (e.g. IP address, SSL certificate), then the browser need not open a second connection. It can just use the first. In our case, being as the origin and assets domain sit on the same infrastructure (CDN), there’s no reason why this can’t happen. Unfortunately browser support is flaky. We checked all the criteria we needed to meet, but saw no actual improvement in performance. You would not believe how many times I read the post ‘HTTP/2 connection coalescing’ by Daniel Stenberg, trying to figure out why we were seeing no improvement at all! In the end I assumed it simply wasn’t working due to browser support issues.

Switching HTTP/2 off

After the initial trial of around 4 weeks, and with no progress on fixing the issue to make HTTP/2 match (let alone beat) HTTP/1.1 performance, I made the decision to switch it off. There’s no reason to be slowing down some users experience of GOV.UK for the extra 7.1% that enabling HTTP/2 gives you in your Lighthouse best practices score.

The only route forward I could see was:

- Remove the assets domain completely and serve the CSS, JS, and images from the origin.

- Serve only the HTML & CSS from the origin, but leave the rest on the assets domain.

Written down, these don’t sound too bad to implement. Unfortunately when it comes to a website the size and complexity of GOV.UK, nothing is ever that simple. So a future upgrade to HTTP/2 was left for when this work could be prioritised.

The rogue image

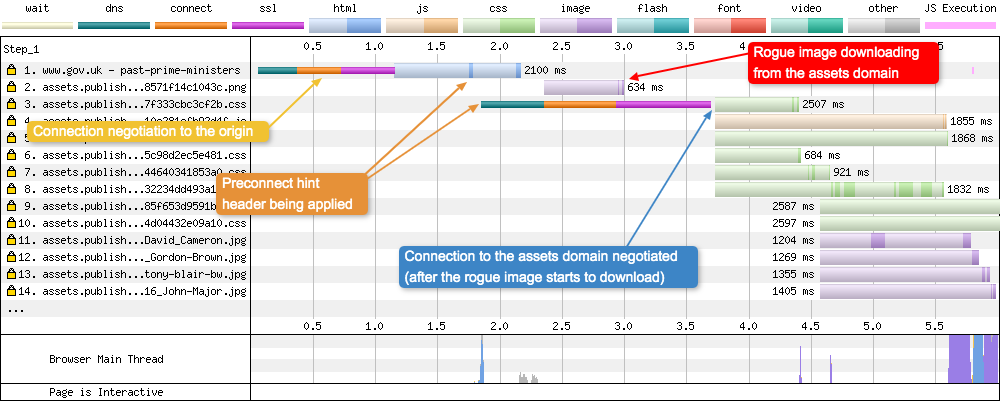

Enough context and rambling, back to the initial waterfall chart I mentioned at the start. Examining it again, there is something strange going on. If you look at request 2, there is a rogue image that is being downloaded. The reason why it’s strange is because according to the same waterfall chart, the DNS + Connect + SSL connection to the ‘assets’ domain hasn’t been negotiated yet (request 3).

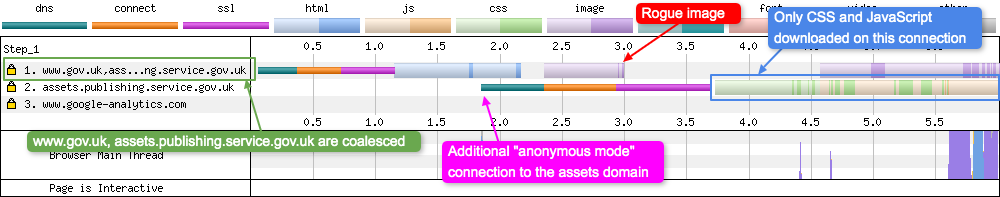

So how can that image be downloading from the assets domain before the connection to it has been established? This is where everything slots into place and it all starts to make sense. If we examine the connection view for the same test:

HTTP/2 coalescing was actually happening(!), hence why the image was downloading before the assets domain connection was established. In other words, the image was downloaded on the same connection as was used when downloading the initial HTML. The question is: why is a second connection to the asset domain actually required then? This is where Subresource Integrity(SRI) comes into play.

Subresource Integrity

Subresource Integrity is a security measure that developers can enable to make sure that assets being served from a domain are only used if they contain code that is as expected. This is useful if you have a third party script hosted on an external domain, and you want to be sure that if the script were to change (e.g. is hacked), it won’t run.

This is done by attaching an integrity attribute to the <script> tag, with a sha256, sha384, or sha512 encoded hash of the file as the value, e.g:

<script src="https://assets.publishing.service.gov.uk/static/libs/jquery/jquery-1.12.4-c731c20e2995c576b0509d3bd776f7ab64a66b95363a3b5fae9864299ee594ed.js" crossorigin="anonymous" integrity="sha256-xzHCDimVxXawUJ0713b3q2Sma5U2OjtfrphkKZ7llO0="></script>

Once downloaded, the browser will calculate the hash of the file. If it matches the hash listed in the integrity attributes value, then the script will execute. Should the third party site be hacked and our embedded script is modified, the browser calculated hash won’t match that of the integrity attribute and the script won’t be executed.

Crossorigin anonymous

But if you look closely at the above script you will see another attribute: crossorigin="anonymous". This crossorigin attribute provides support for CORS, and it defines how the element handles cross-origin requests. The value of ‘anonymous’ means that there will be no exchange of user credentials via cookies, client-side SSL certificates or HTTP authentication, unless on the same origin. The crossorigin attribute is a required security measure needed for SRI to work, and can be seen in the specification. And at this point we reach the root of the issue.

The issue

In order for the browser to download any of these SRI requests, it must first establish a TCP connection in ‘anonymous mode’, since the initial connection to the server is using a non-CORS credentialed connection. This obviously takes time, as TCP connections are expensive. While this new connection is being established, all the assets on the page are waiting for it.

What makes this even worse in GOV.UK’s case is that the CSS also has SRI enabled. The browser is desperately trying to download the CSS so it can create the render tree (CSSOM + DOM), but the delay is stopping it from doing so. Once done it can move then onto the layout stage (also known as reflow) of the page build process. This delay is a real killer, and it puts the browser on the back-foot in terms of performance right from the start.

Order of events

Lets quickly run through the order of events the browser is seeing under these conditions:

- Initial connection to the server negotiated, the HTML page downloads

- Browser sees the

preconnectin the headers and examines the domain that is referenced - Browser sees that the origin and

preconnectdomain are similar, so coalescing can occur - With this coalesced connection now ready, the first image is sent by the server

- The browser now sees that the CSS has SRI enabled, and realises it doesn’t have an ‘anonymous’ connection established to allow it to be downloaded

- Browser opens the ‘anonymous’ connection (after some delay), and all other CSS / JS assets are downloaded using it (2nd line in the connection view)

- Other non-SRI assets continue to use the initial connection

There’s another issue here for HTTP/2. TCP connections are independent of each other, so HTTP/2 prioritisation won’t work across them (as they have no knowledge of what each other is doing). For HTTP/2 prioritisation to work properly, the browser needs to be using multiplexing streams over a single TCP connection.

The impact SRI is having

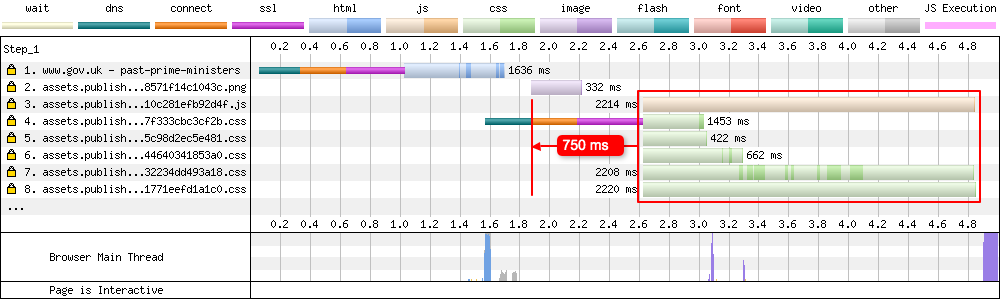

Looking at the waterfall, it is possible to estimate the impact this SRI setup is having on the page metrics under HTTP/2:

Assuming both the CSS and JS are allowed to use the initial coalesced connection, their request start time for this particular test could be brought forward by 750 ms! That’s a huge chunk of time in web performance terms. Assuming we can make similar proportional changes across all the tests I listed in the spreadsheet above, I’m sure a high percentage would suddenly turn very green and switch to ‘h2’.

Future plans

So now that this has been spotted, what next? Well, the bare minimum that needs to happen is that SRI is disabled on CSS assets (or changed to crossorigin="use-credentials" which should in theory allow us to use that already established, credentialed connection). If this happens, at least the browser can quickly create the render tree over the coalesced connection in a non-blocking fashion. By this point the preconnect domains for any ‘anonymous mode’ assets should have been negotiated in parallel, ready for it to be used when needed (e.g. with fonts).

There’s then the question of disabling SRI on JavaScript assets. Personally I’d like to see it disabled as it will allow all assets to be multiplex streamed over the single TCP connection. Since the assets domain is first party (we control it), it seems to make sense to do this. Although I’m sure many security experts may be shaking their heads and disagreeing with this. If you are, I’d love to hear why over on Twitter.

And lastly, the final option could be to remove the assets domain (shard) completely, and serve all static assets from the main domain. This would be the ideal solution, as it removes any need for the flaky HTTP/2 connection coalescing. But due to the complexity of the GOV.UK website, I don’t see this happening anytime soon.

I’ll be sure to post an update once there’s been some progress and verified it actually fixes the issue (fingers crossed!). Thanks go out to Barry Pollard and Andy Davies for their technical input, and Iulia Iacoban for the original question!

Update - January 2020

CORS throws a spanner in the works

Just when I thought everything was going to be simple, CORS rears its safety conscious head and stops you in your tracks. If you happen to be serving your assets from the other domain with the Access-Control-Allow-Origin: * header, you can’t simply swap crossorigin="anonymous" for crossorigin="use-credentials" as the browser will give you a few angry looking warning and block the request:

Cross-Origin Request Blocked: The Same Origin Policy disallows reading the remote resource at ‘https://assets.example.com/script.js’. (Reason: Credential is not supported if the CORS header ‘Access-Control-Allow-Origin’ is ‘*’).

It turns out the wildcard value doesn’t allow it to be used in this way over a credentialed connection. Attempting to use the wildcard with credentials will result in an error.

If you are interested in what the Fetch specification says, there’s some information in the table (5th row down), and it’s reiterated again in the paragraph under the table:

Access-Control-Expose-Headers,Access-Control-Allow-Methods, andAccess-Control-Allow-Headersresponse headers can only use*as value when request’s credentials mode is not “include”.

Thinking about it more (assuming I understand CORS), in using the wildcard and asking the browser to use a credentialed connection, you are essentially telling the browser to allow a script to be loaded from a cross-origin domain that can be executed by all domains (*), and you are telling the browser to use a credentialed connection. So really, it is removing the security protections that CORS is there to fix.

Back to the drawing board for the moment…